Digimagaz.com – Google is taking another step toward making artificial intelligence feel less generic and more personally useful. The company has begun rolling out a new beta feature for Gemini that allows users to connect select Google apps with a single tap, giving the AI assistant deeper context drawn from their own digital life.

The feature, called Personal Intelligence with Connected Apps, is launching in beta for eligible Google AI Pro and AI Ultra subscribers in the United States. While it may sound like a simple integration update, it signals a broader shift in how Google envisions the future of AI assistants: tools that do more than answer questions, and instead help users make decisions based on their habits, history, and preferences.

From general answers to personal context

Most AI assistants today rely on public information and generic patterns. Gemini’s new approach blends that baseline knowledge with user-approved access to apps like Gmail, Google Photos, YouTube, and Search. Once enabled, Gemini can reason across emails, images, and past activity to deliver answers that are tailored rather than one-size-fits-all.

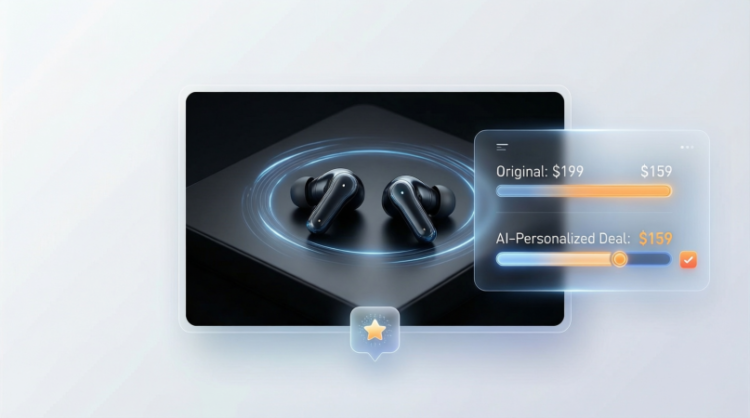

This matters because many real-world questions are personal by nature. Finding a tire size, a flight detail, or a travel recommendation often requires digging through emails, photos, or past searches. Instead of forcing users to switch between apps, Gemini can now retrieve that information directly and combine it with broader context, such as product reviews or location data.

In practice, this allows Gemini to move beyond basic lookup tasks. It can suggest options, compare choices, and explain why a recommendation makes sense based on a user’s past behavior, not just popular trends.

Everyday use cases, not just demos

Google positions Personal Intelligence as a practical upgrade rather than a flashy feature. Early examples focus on everyday scenarios: pulling a license plate number from a photo, identifying a vehicle trim from an old email, or planning a family trip that avoids overcrowded attractions.

What stands out is the assistant’s ability to connect the dots across different formats. A single request can involve text from Gmail, images from Photos, and contextual signals from Search. This multimodal reasoning is where Gemini aims to differentiate itself from competing assistants that still treat apps as mostly separate silos.

The same system can also refine recommendations over time. Book suggestions, clothing ideas, and entertainment picks are influenced by past choices and stored content, making the assistant feel more like a long-term helper than a search box with a personality.

Privacy controls remain central

With deeper personalization come obvious privacy concerns, and Google is leaning heavily on user control to address them. Personal Intelligence is turned off by default. Users must explicitly enable it and choose which apps to connect. Each app can be disconnected at any time, and personalization can be disabled for individual conversations.

Google emphasizes that it does not directly train its AI models on personal content from Gmail or Photos. Instead, that data is referenced in real time to generate responses. Model training relies on limited information, such as prompts and responses, after steps are taken to remove or obscure personal details.

Another notable feature is transparency. Gemini attempts to explain where its answers come from, allowing users to see whether information was pulled from an email, a photo, or general knowledge. If something looks wrong, users can ask for clarification or correct the assistant on the spot.

For more sensitive topics, Google says it has guardrails in place. Gemini avoids making assumptions about areas like health unless the user explicitly brings them up, a design choice aimed at reducing uncomfortable or inappropriate inferences.

Limitations and ongoing refinement

Despite the ambition, Google acknowledges the beta is far from perfect. Over-personalization remains a risk, especially when the assistant misreads patterns. Frequent photos at a golf course, for example, might lead Gemini to assume enthusiasm for the sport, even if the reality is more nuanced.

Timing and life changes also pose challenges. Shifts in relationships, interests, or routines may not be immediately reflected in the assistant’s understanding. Google is encouraging users to actively correct Gemini and provide feedback when responses miss the mark.

This feedback loop is central to the beta phase. User reports help identify gaps in reasoning, context awareness, and tone, all of which are still under active research and development.

Availability and what comes next

Access to Personal Intelligence with Connected Apps is rolling out gradually to eligible subscribers in the U.S. The feature works across web, Android, and iOS, and is compatible with all Gemini models available in the model picker. For now, it is limited to personal Google accounts and does not support Workspace, enterprise, or education users.

Google says broader expansion is planned, including availability in more countries and eventual access for free-tier users. The company also plans to bring the feature into AI Mode in Search, hinting at a future where personalized AI assistance is embedded directly into everyday search experiences.

For now, Gemini’s connected apps beta offers a glimpse into what AI looks like when it starts to understand not just the world, but the individual navigating it.